Your CEO just announced the company’s AI-first strategy and the product team is shipping AI features faster than ever. Marketing is promising intelligent automation to customers, while the QA team is left wondering how to actually test this stuff.

Every QA team is grappling with the same challenge as AI becomes the default solution for everything from customer service to content generation.

1. The AI QA Challenge

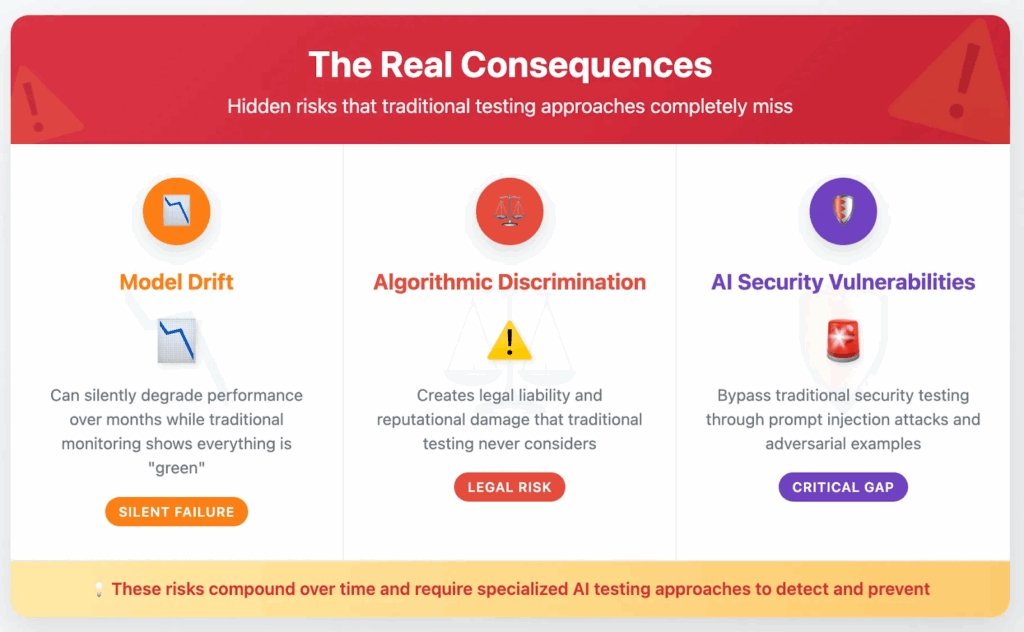

Imagine a situation where all your regression tests pass, and your performance tests show green, but users are getting made-up information instead of accurate responses. Your edge case coverage looks comprehensive, but your chatbot just recommended an unverified phishing link.

This isn’t a failure of QA frameworks. It reveals how AI systems challenge the fundamental assumptions underlying existing testing approaches.

Most QA teams are still trying to test AI systems using frameworks designed for deterministic software.

AI systems violate three core assumptions that non-AI testing relies on:

- Deterministic Behavior: Expecting identical outputs for identical inputs will cause most AI tests to fail incorrectly. A language model given the same prompt twice might generate different but correct responses. Automation frameworks need to evolve from exact matching to semantic similarity evaluation.

- Static System Boundaries: AI systems continuously evolve as they encounter new data and learn from user feedback. A sentiment analysis model might maintain the same accuracy score while developing bias against specific demographic groups. Regression testing needs statistical validation, not just output comparison.

Predictable Failure Modes: AI systems fail in novel ways standard testing doesn’t anticipate. They make up believable-sounding information, reflect past biases, and can get worse over time as data changes from what they were trained on.

The solution is to evolve testing approaches. Skills in test planning, automation, and process management transfer directly to AI QA. What changes is the focus: validating learned behaviors instead of testing predetermined logic paths, working with statistical confidence intervals instead of binary pass/fail criteria, and implementing continuous monitoring for system drift instead of static regression testing.

This whitepaper shows you exactly how to make this evolution successfully.

2. The AI QA Framework

Standard testing frameworks excel at validating that software follows programmed logic: given input X, the system produces output Y. AI systems learn behavior from data patterns rather than following explicit programming. They’re probabilistic by design, making different decisions based on confidence levels, randomness parameters, and learned associations.

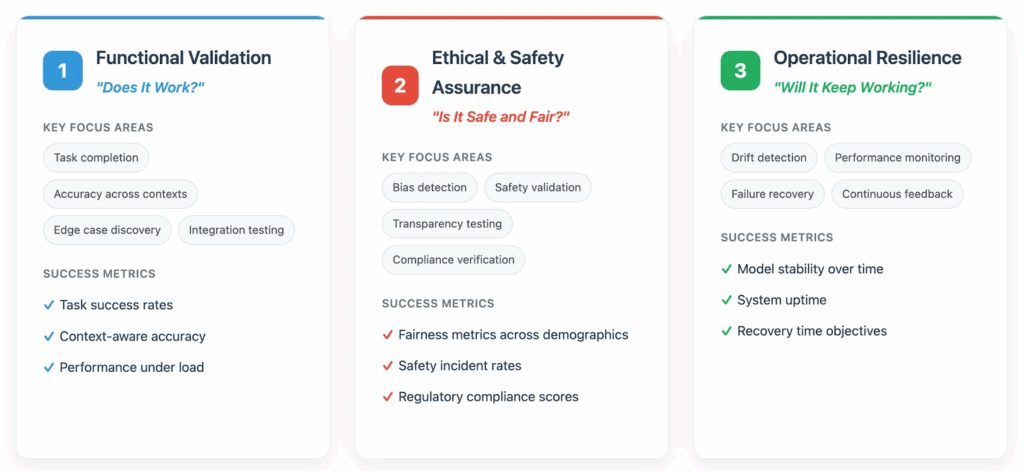

Effective AI QA requires comprehensive coverage across three critical dimensions:

Understanding AI Application Types

Different AI implementations require different testing approaches:

- AI-Enhanced Features: Traditional apps with AI components (smart search, recommendations)

- AI-First Applications: Core functionality depends on AI (chatbots, content generators)

- AI Workflows: Multi-step processes using AI (automated testing, data analysis)

- AI Agents: Autonomous systems making decisions (trading bots, monitoring systems)

Each type has distinct testing requirements and risk profiles that inform your testing strategy.

3. Functional Validation – “Does It Work?”

Functional validation for AI systems requires a fundamental shift from testing predetermined logic to evaluating learned behaviors. The core challenge is ensuring AI systems complete user tasks successfully despite their probabilistic nature.

Testing Across AI Implementation Types

Different AI implementations require tailored functional testing approaches:

| Implementation | Description | Testing Focus |

| AI-Enhanced Features | Search suggestions, smart filters | Integration points between AI and standard workflows. Test task completion rates and graceful degradation when AI is unavailable. Validate real user outcome improvements. |

| AI-First Applications | Chatbots, content generators | End-to-end conversation flows and content quality. Test multi-turn interactions with context maintenance. Focus on task completion rather than individual response accuracy. |

| AI Workflows | Automated data analysis, multi-step processing | Accuracy at each process step and proper error propagation. Test resilience with low-confidence outputs. Ensure smooth human handoff points when automation reaches its limits. |

| AI Agents | Autonomous decision-making systems | Decision consistency across similar scenarios and boundary compliance. Monitor for unexpected behavior patterns indicating training data issues or environmental changes. |

Integration With QA Workflows

Functional validation integrates into existing QA practices through expanded test case design and automation frameworks that handle non-deterministic outputs.

Test Case Design: Standard test cases focus on input-output pairs. AI test cases focus on task completion scenarios across multiple interaction turns. Instead of “verify button click triggers function X,” design scenarios like “verify user can complete purchase recommendation flow within three interactions.” This requires expanding acceptance criteria to include probabilistic success measures rather than binary pass/fail outcomes.

Let’s examine a practical example to illustrate this shift.

For a standard e-commerce feature, a test case might look like this:

Test Case: Verify Product Filter Function

Given: User selects "Laptops" category and "$1000-$2000" price filter

When: System processes filter selections

Then: Display all laptops priced between $1000-$2000

Success Criteria: Exact product count matches database query resultsWhen we introduce AI-Enhanced Features like smart product recommendations, our test approach must evolve from technical verification to comprehensive goal validation:

Test Case: Smart Product Recommendation Flow

Scenario: User seeking laptop recommendations based on stated needs

Test Steps:

1. User input: "I need a laptop for graphic design work"

2. AI response: [Acknowledge need + ask clarifying questions]

Expected elements: Budget inquiry, performance requirements, usage context

3. User input: "Budget around $2000, need good graphics card"

4. AI response: [Recommend 2-3 specific laptops with rationale]

Expected elements: Specific models, graphics capabilities, price points

5. User input: "What about battery life on these?"

6. AI response: [Provide battery specifications for recommended models]

Expected elements: Specific battery data for previously mentioned laptops

Primary Success Criteria:

- User Goal Achievement: User receives actionable laptop recommendations suitable for graphic design

- Contextual Accuracy: AI maintains focus on graphic design requirements throughout conversation

- Information Quality: All recommended products exist, are available, and meet stated criteria

- Conversation Flow: Natural progression from need identification to specific recommendations

Measurable Acceptance Thresholds:

- Recommendation Relevance: ≥90% of suggested laptops meet user's stated requirements (graphic design capability, $2000 budget)

- Context Retention: AI references graphic design context in 100% of follow-up responses

- Product Accuracy: Recommended laptops exist in inventory and pricing is accurate within 5%

- User Progression: ≥75% of users click through to view at least one recommended product

- Task Completion: ≥80% of users report understanding their laptop options after the interaction

Advanced Validation Scenarios:

- Context Switching: User changes requirements mid-conversation ("Actually, I need it for gaming too")

- Ambiguous Input: User provides unclear budget ("not too expensive")

- Edge Case Handling: No products match exact criteria (budget too low for graphic design needs)

- Follow-up Complexity: User asks detailed technical questions about recommended productsThis approach requires test data that goes beyond standard boundary values to cover diverse conversation patterns.

Automation Framework Adaptation: Existing automation frameworks need semantic similarity evaluation instead of exact output matching. Implement assertion libraries that can evaluate whether AI responses are semantically equivalent to expected outcomes. This means training your automation to understand that “The weather is sunny today” and “Today’s forecast shows clear skies” convey the same information.

Context-Aware Performance Measurement: Typical performance testing measures speed and reliability. AI functional testing adds accuracy across different contexts, user segments, and input types. A customer service AI might excel with billing questions but struggle with technical support issues. Your testing framework needs to bucket results across these different contexts to identify performance gaps.

Edge Case Discovery: Manual boundary testing becomes inadequate for AI systems operating in high-dimensional input spaces. Implement property-based testing that automatically generates diverse inputs and validates that certain properties hold. Use adversarial testing techniques to discover inputs that cause AI failures, similar to security penetration testing but focused on functional breakdowns.

Load and Accuracy Correlation: Unlike traditional systems that fail predictably under load, AI systems might maintain response times while silently degrading output quality. Your load testing needs to monitor accuracy metrics alongside performance metrics, detecting scenarios where the system appears healthy but delivers poor user experiences.

4. Ethical And Safety Assurance – “Is It Safe And Fair?”

Ethical and safety testing addresses risks that standard software doesn’t face: algorithmic discrimination, harmful content generation, and decision transparency failures. This testing dimension focuses on social impact rather than technical functionality, but uses familiar systematic evaluation approaches.

Risk Assessment By AI Type

| AI Type | Risk Profile | Key Testing Focus |

| AI-Enhanced Features | Lower risk – augments rather than replaces human decision-making | Biased recommendations, discriminatory filtering, filter bubbles that reinforce existing biases |

| AI-First Applications | Higher risk – direct user interaction and content generation capabilities | Harmful content generation, stereotype perpetuation, dangerous advice, copyright issues, misinformation |

| AI Workflows | Variable risk – depends on workflow impact (e.g., job applications vs. email organization) | Decision points affecting protected characteristics, transparency for human oversight and intervention |

| AI Agents | Highest risk – autonomous decision-making without human oversight | Boundary compliance, escalation mechanisms for edge cases, bias amplification prevention |

Systematic Bias Detection

Bias testing applies comparative testing methodologies across demographic groups rather than across platforms or browsers. This includes systematic evaluation of AI performance across common domains with known bias risks:

- Healthcare: Test for gender, race, and age bias in symptom recognition and treatment recommendations that might perpetuate existing healthcare disparities

- Finance: Evaluate for redlining proxies, gender bias in credit assessments, and income-based discrimination that could restrict financial access

- Hiring: Detect gender bias in technical role evaluations, name-based discrimination, and qualification assessment disparities across demographic groups

Create test datasets that represent diverse user populations and measure AI system outcomes across protected characteristics like gender, race, age, and disability status.

Implement A/B testing approaches that compare AI behavior between demographically similar users to identify discriminatory patterns. This requires the same statistical rigor applied to performance testing but focuses on fairness metrics rather than speed measurements. Test for intersectional bias, systems might treat individual groups fairly while discriminating against specific combinations.

Content Safety And Harm Prevention

Safety testing systematically probes for harmful outputs using red team exercises similar to security penetration testing. Instead of looking for SQL injection vulnerabilities, test for prompt injection attacks that manipulate AI behavior to generate inappropriate content.

Develop test suites that attempt to generate harmful outputs including hate speech, dangerous instructions, privacy-violating information, or misinformation. Use both automated tools and human evaluation to identify safety gaps across diverse contexts and user scenarios.

Integration With QA Workflows

Expanding Quality Gates: Standard quality gates block releases for technical failures. Ethical AI testing adds bias thresholds, safety violation detection, and transparency requirements to release criteria. Instead of just asking “Does it work?” quality gates now ask “Does it work fairly and safely for all users?”

Documentation and Audit Trails: Extend existing test documentation to include bias testing results, safety validation evidence, and explainability assessments. This builds on familiar compliance testing approaches but focuses on AI-specific requirements. Model cards document AI system capabilities and limitations similar to existing system requirements documentation.

Regulatory Compliance Integration: Emerging AI regulations like the EU’s AI Act require systematic testing documentation similar to existing compliance requirements for healthcare, finance, or accessibility. Apply familiar regulatory testing processes to AI-specific requirements, maintaining the same documentation standards and audit trail practices.

Stakeholder Communication: Bias and safety issues require explaining statistical concepts and ethical considerations to business stakeholders who may not understand these intuitively. This builds on existing risk communication skills but requires translating fairness metrics and safety assessments into business impact terms.

5. Operational Resilience – “Will It Keep Working?”

Operational resilience for AI systems extends traditional monitoring and incident response to handle unique failure modes: model drift, accuracy degradation, and performance changes that occur gradually rather than through discrete failures. AI systems can maintain technical availability while silently degrading in ways that standard monitoring doesn’t detect.

Monitoring Strategy By AI Type

| AI Type | Monitoring Focus | Key Indicators |

| AI-Enhanced Features | Recommendation relevance, search quality, user engagement alongside usual metrics | Click-through rates, user satisfaction scores, conversion metrics |

| AI-First Applications | Conversation quality, content appropriateness, task completion rates | Conversation abandonment rates, user satisfaction feedback, escalation to human support |

| AI Workflows | Accuracy and processing time at each step, error propagation between components | Checkpoint validation of intermediate results, cascade failure detection |

| AI Agents | Decision quality, boundary compliance, autonomous operation | Decision pattern drift, parameter compliance, instances requiring human review |

Drift Detection And Response

Model performance naturally degrades as real-world data distributions change. Implement statistical monitoring that clearly differentiates between normal AI system variation and meaningful performance changes requiring intervention.

Use statistical process control to identify significant changes in feature distributions that may indicate model drift. Differentiate between drift types: feature drift (affecting input variable distributions), concept drift (changing relationships between inputs and outputs), and label drift (affecting target variable distributions). Each type requires a specific response strategy.

Build adaptive monitoring systems that account for seasonal patterns and expected business variations. Many metrics follow weekly, monthly, or seasonal cycles that shouldn’t trigger false alerts. Configure your monitoring to recognize these normal patterns while still detecting genuine performance degradation. Depending on your system’s criticality and data volatility, you may need monitoring frequencies ranging from hourly tests to weekly or monthly evaluations.

Performance And Cost Optimization

AI workloads have unique scaling characteristics requiring specialized performance testing. Test for accuracy under load since AI systems might maintain response times while degrading output quality when overwhelmed. Some AI workloads benefit from horizontal scaling while others require powerful single machines with specialized hardware.

Implement cost-performance optimization testing that balances computational expenses with accuracy requirements. AI systems often have configurable performance-accuracy trade-offs through model size, processing depth, or confidence thresholds. Test these configurations under realistic load patterns to optimize for business objectives.

Integration With QA Operations

Incident Response Expansion: Existing incident response procedures handle technical failures effectively but need expansion for AI-specific incidents like bias emergence, accuracy degradation, or safety violations. Develop escalation procedures that can quickly engage domain experts and stakeholders when AI systems behave unexpectedly.

Continuous Feedback Integration: AI systems improve through user feedback integration, requiring ongoing validation of learning mechanisms. Test active learning implementations that use feedback to identify valuable training examples. Validate that feedback processing pipelines filter noise while incorporating genuine improvement signals.

Rollback and Recovery Procedures: AI system rollbacks need triggers based on accuracy degradation and fairness violations rather than just technical failures. Test automated retraining and model update procedures using systematic validation approaches. Ensure backup models can maintain service quality when primary models need emergency replacement.

Data Pipeline Resilience: AI systems depend heavily on data pipeline reliability for both training and inference. Extend existing data monitoring to include feature consistency validation, training-serving skew detection, and data quality regression testing. Monitor for subtle data changes that might not cause immediate technical failures but degrade model performance over time.

6. Implementation Roadmap And Organizational Considerations

Successful AI testing implementation requires a strategic approach that builds on existing infrastructure while introducing AI-specific capabilities.

Infrastructure Strategy By AI Implementation Scale

| Implementation Scale | Testing Approach | Key Implementation Focus |

| Pilot Projects and AI-Enhanced Features | Start with low-risk AI features where standard testing can be gradually extended | Basic statistical validation alongside existing automation frameworks; features like smart search or content recommendations |

| Production AI-First Applications | Dedicated testing infrastructure for probabilistic outputs and continuous model evaluation | Extended CI/CD pipelines with model artifacts, statistical validation gates, and bias detection; comprehensive monitoring systems |

| Enterprise AI Workflows | Sophisticated testing orchestration for multi-step processes with multiple AI components | Testing environments simulating complex data flows; frameworks isolating issues to specific workflow stages |

| Autonomous AI Agents | Comprehensive testing infrastructure including simulation environments | Testing frameworks validating agent decision-making across diverse scenarios while ensuring defined parameter operation |

Tool Selection and Integration Strategy

Choose AI testing tools that integrate seamlessly with existing development ecosystems rather than requiring parallel infrastructure. TensorFlow Extended (TFX) works effectively for teams already using TensorFlow, providing ML pipeline management with data validation and model analysis capabilities that extend current CI/CD workflows.

MLflow offers experiment tracking and model registry with broad framework support, allowing teams to maintain existing tool preferences while adding AI-specific capabilities. The platform extends current test reporting mechanisms while providing flexible deployment options that work with established infrastructure.

For teams requiring specialized capabilities, custom testing frameworks are an option. However, these frameworks demand significant development effort and ongoing maintenance. QA teams without dedicated MLOps resources or those wanting to avoid the overhead of custom framework development should consider an integrated testing platform instead. Managed solutions simplify the process by eliminating the need to coordinate separate tools for data versioning, model analysis, and semantic validation. This allows QA professionals to focus on strategic testing rather than infrastructure management.

Scaling Your Practice

Organizational Change Management: Communicate benefits clearly, address resistance through education, and demonstrate quick wins. When your pilot project catches a bias issue or when monitoring detects model drift early, use those success stories. Convert skeptics into advocates through hands-on experience.

Process Integration: Embed AI QA into existing workflows rather than creating separate processes. Simply expand current quality gates with additional criteria.

Knowledge Transfer: Combine formal training with hands-on application and create communities of practice for knowledge sharing. This builds organizational best practices for AI use while helping skeptics see the actual value delivered.

AI QA builds upon familiar roles and responsibilities:

- Skill Development: Existing QA professionals can excel at testing AI features with focused training in statistical concepts and bias detection. Embed specialists within ML teams while maintaining centralized standards.

- New Specializations: Introduce AI-specific roles gradually, such as ML Test Engineers, AI Ethics Officers, and Data Quality Engineers who apply their existing skills to new AI contexts.

- Cross-Functional Collaboration: AI QA requires closer collaboration between QA and development teams. Establish embedded QA specialists within ML teams while maintaining centralized standards.

7. Conclusion

Organizations mastering QA for AI gain significant competitive advantages as AI becomes standard across software applications.

The payoffs include faster time-to-market for AI features, higher user satisfaction, lower operational costs, and better regulatory compliance. Companies with mature AI QA practices see measurably better outcomes.

The question isn’t whether these capabilities are needed, it’s whether they’ll be developed proactively or reactively. The choice made today determines whether organizations lead this transformation or follow it.

Your Next Steps

- Immediate Actions (Next 30 Days): Assess current capabilities using this framework. Identify team members with relevant skills for AI QA roles. Select pilot projects demonstrating value while providing learning opportunities.

- Medium-Term Goals (3-6 Months): Implement comprehensive QA on pilot projects using the three-pillar framework. Integrate into existing workflows through expanded quality gates. Measure and document improvements.

- Long-Term Vision (6+ Months): Scale AI QA capabilities across relevant projects and teams. Establish organizational standards. Build internal expertise through training and collaboration.