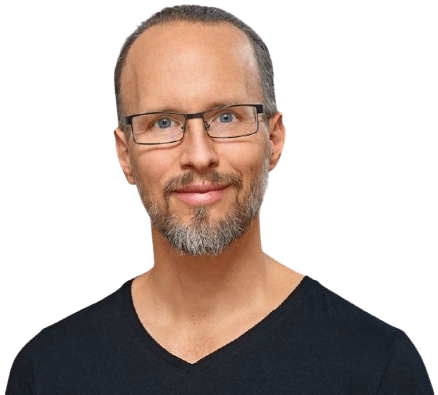

This talk explores the challenges of testing agentic AI systems: AI that autonomously reacts to events and initiates processes. Drawing on decades of experience, Robert Sabourin argues that testing begins and ends with risk. A three-dimensional model (business impact, technical risk, autonomy) guides evaluation. Testers generate test ideas using a broad taxonomy, from capabilities and failure modes to creative and adversarial approaches. Continuous testing and monitoring ensure that findings inform business decisions, with the emphasis on learning over correctness.

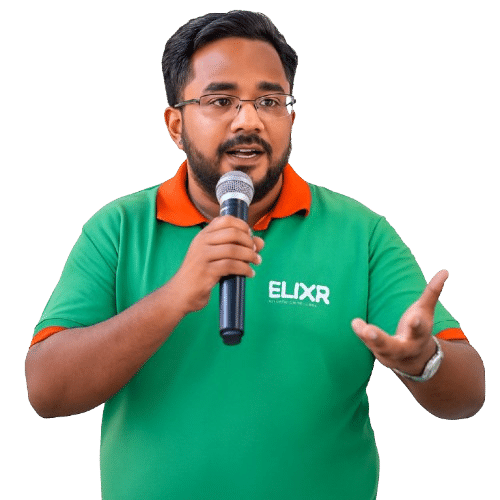

“Test Tribe are the backend people (DB layer) who help the people (UI layer) by communicating through the API layer (the speakers).” This session changed my perception through the Atomic Talks. As the Atomic Talks went on and more and more information was fed into my mind, I realized that my mind had started to scale, like an AI learning from the data it is fed. Thank you once again, and …. that sums it up for the team!